Understanding Business Value of MLOps


The simplest way to start is to imagine building a robot that learns to play chess or to identify animals in images. That is what machine learning is about: teaching computers to learn from data.

But building the robot is only the beginning. What if you want it to get smarter every week? What if you want it to run on many computers around the world? And how do you make sure it is not making mistakes?

This is where MLOps comes in. Think of MLOps as a school routine: you wake up, get ready, attend classes, learn, review, take exams, and improve. MLOps helps ML models do the same: learn, improve, and perform their tasks better over time, automatically.

Introduction to Machine Learning Operations

MLOps applies DevOps principles, such as automation, continuous integration and delivery (CI/CD), and monitoring, to the machine learning lifecycle. It helps ensure that ML models are developed, tested, deployed, monitored, and updated in a scalable, reproducible, and efficient way.

Think of MLOps as the bridge between experimental machine learning and production-ready AI systems that can scale across your organization while maintaining reliability and performance.

Key Components of MLOps

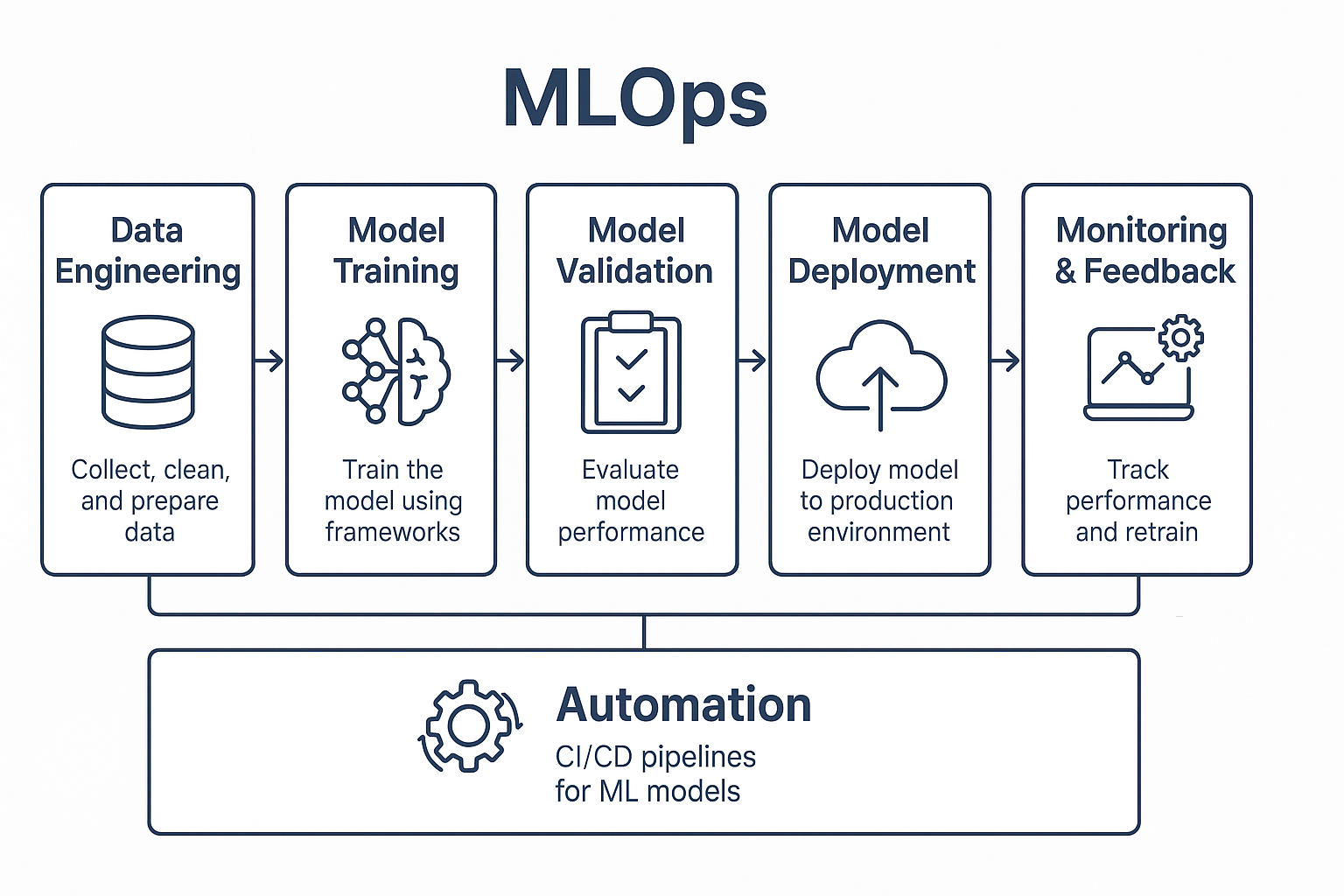

The MLOps lifecycle encompasses several critical stages that ensure smooth operation of machine learning systems:

| Stage | Description |
|---|---|
| Data Engineering | Collect, clean, and prepare the data used for training. |
| Model Training | Train the model with frameworks such as TensorFlow or PyTorch, or on a managed platform such as Amazon SageMaker. |
| Model Validation | Measure model performance with metrics such as accuracy or F1-score. |
| Model Deployment | Deploy the model to a production environment (e.g., behind a REST API). |
| Monitoring & Feedback | Track performance and data drift, and retrain when needed. |
| Automation | Automate the workflow with CI/CD pipelines for ML models (e.g., AWS CodePipeline, CodeBuild). |

Key components of the MLOps lifecycle
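To make the stages in the table concrete, here is a minimal, dependency-free Python sketch that walks a toy dataset through data preparation, training, and validation with F1-score. It is an illustration under stated assumptions, not a production pipeline: the one-feature threshold "model" and every function name are hypothetical stand-ins for a real TensorFlow, PyTorch, or SageMaker training job.

```python
import random
from typing import List, Tuple

Example = Tuple[float, int]  # (feature value, binary label)


def prepare_data(raw: List[Tuple[object, object]]) -> List[Example]:
    """Data Engineering: drop incomplete rows and coerce types."""
    clean = []
    for x, y in raw:
        if x is None or y is None:
            continue
        clean.append((float(x), int(y)))
    return clean


def train(data: List[Example]) -> float:
    """Model Training: choose the threshold with the best training accuracy."""
    def accuracy(threshold: float) -> float:
        hits = sum((x >= threshold) == bool(y) for x, y in data)
        return hits / len(data)

    candidates = sorted({x for x, _ in data})
    return max(candidates, key=accuracy)


def f1_score(y_true: List[int], y_pred: List[int]) -> float:
    """Model Validation: F1 = 2 * precision * recall / (precision + recall)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def validate(threshold: float, data: List[Example]) -> float:
    """Score the trained threshold on held-out data."""
    y_true = [y for _, y in data]
    y_pred = [int(x >= threshold) for x, _ in data]
    return f1_score(y_true, y_pred)


if __name__ == "__main__":
    random.seed(0)
    # Synthetic data: label-1 examples are centred at 2.0, label-0 at 0.0.
    raw = [(random.gauss(2.0 * label, 1.0), label) for label in [0, 1] * 100]
    raw.append((None, 1))  # a dirty row that prepare_data drops
    data = prepare_data(raw)
    model = train(data[:150])
    print(f"threshold={model:.2f}  held-out F1={validate(model, data[150:]):.2f}")
```

Deployment, monitoring, and automation would wrap around these functions; in a real setup each step usually runs as a separate, versioned pipeline job.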


MLOps Tools: AWS vs Open Source

Organizations have many options when implementing MLOps, ranging from cloud-native services to open-source tools. Here is a stage-by-stage comparison:

| Stage | AWS Services | Open-Source Tools |
|---|---|---|
| Data & Versioning | Amazon S3, AWS Glue | Pandas, Airflow, Data Version Control (DVC) |
| Training | SageMaker, EC2 GPU instances, CodeBuild | TensorFlow, PyTorch, Scikit-learn |
| Deployment | SageMaker Endpoints, Lambda, ECS | Flask, FastAPI, Docker, Kubernetes |
| CI/CD | CodePipeline, CodeDeploy, Step Functions | Jenkins, GitHub Actions |
| Experiment Tracking & Registry | SageMaker Model Registry | MLflow |
| Monitoring | CloudWatch, SageMaker Model Monitor | Prometheus, Evidently AI |

Comparison of AWS and Open Source MLOps tools

Why MLOps is Beneficial for Business

MLOps delivers tangible business value across multiple dimensions, transforming how organizations leverage machine learning for competitive advantage.

1. Faster Time to Market

- Value: Speeds up the delivery of ML-powered products and features

- How: Streamlines training, testing, and deployment

- Impact: Reduces manual handoffs and increases the pace of innovation

2. Enhanced Scalability Across Teams and Models

- Value: Makes it practical to manage many ML models across departments

- How: Uses centralized pipelines and reusable components

- Impact: Facilitates collaboration among multiple teams and encourages model reuse

3. Cost Efficiency

- Value: Uses compute resources efficiently for cloud-based training and inference

- How: Applies auto-scaling, runs training on spot instances, and schedules training jobs

- Impact: Raises GPU and CPU utilization, which lowers cloud spend
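Spot instances can be reclaimed with little notice, so training jobs that run on them usually save checkpoints to durable storage and resume from the latest one. The sketch below shows that pattern with a local JSON file standing in for durable storage such as Amazon S3; the file layout, the function names, and the toy "epoch" of work are assumptions for illustration.

```python
import json
import os
import tempfile
from typing import Optional


def load_checkpoint(path: str) -> dict:
    """Resume from the last saved state, or start fresh if none exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"epoch": 0, "weight": 0.0}


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then rename, so an interruption mid-write
    does not leave a half-written checkpoint."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename on POSIX file systems


def train_resumable(path: str, total_epochs: int,
                    interrupt_at: Optional[int] = None) -> dict:
    """Toy training loop; `interrupt_at` simulates the instance being reclaimed."""
    state = load_checkpoint(path)
    while state["epoch"] < total_epochs:
        if interrupt_at is not None and state["epoch"] == interrupt_at:
            raise InterruptedError("spot instance reclaimed")
        state["weight"] += 0.1  # stand-in for one epoch of real training
        state["epoch"] += 1
        save_checkpoint(path, state)  # persist progress after every epoch
    return state


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
    try:
        train_resumable(ckpt, total_epochs=10, interrupt_at=4)
    except InterruptedError as exc:
        print(f"{exc}; last saved epoch = {load_checkpoint(ckpt)['epoch']}")
    final = train_resumable(ckpt, total_epochs=10)  # a replacement instance resumes
    print(f"finished at epoch {final['epoch']}")
```

Checkpointing every epoch trades a little I/O for losing at most one epoch of work per interruption; longer epochs may warrant checkpointing more often.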

4. Auditability and Compliance

- Value: Helps ensure that models meet regulatory requirements and remain interpretable

- How: Tracks data, model, and code lineage through logging and versioning

- Impact: Supports compliance with regulations such as GDPR and HIPAA
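Lineage tracking can start as simply as writing one immutable record per training run. The sketch below hashes the training data, stores the code version, hyperparameters, and metrics, and appends the record to a JSON Lines audit log. The field names and log format are assumptions; managed tracking tools provide richer versions of the same idea.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_of(data: bytes) -> str:
    """Content hash: identical training data always yields the same fingerprint."""
    return hashlib.sha256(data).hexdigest()


def lineage_record(dataset: bytes, code_version: str,
                   params: dict, metrics: dict) -> dict:
    """Bundle what an auditor needs to trace how a model was produced."""
    return {
        "dataset_sha256": sha256_of(dataset),
        "code_version": code_version,  # e.g. a git commit hash
        "params": params,
        "metrics": metrics,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def append_to_audit_log(path: str, record: dict) -> None:
    """Append-only JSON Lines log: one entry per training run, never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
```

A training job would call `append_to_audit_log` once per run, after evaluation, so every deployed model can be traced back to its exact data and code.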

5. Enhanced Model Performance

- Value: Improves customer satisfaction through more accurate models

- How: Continuous evaluation and retraining on fresh data

- Impact: Detects model drift early and limits its effect, keeping predictions consistent
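Continuous evaluation usually ends in a decision: keep serving the current model or retrain it. Here is a minimal sketch of such a policy, assuming you already compute a live F1-score from labelled feedback and a drift score from a monitoring tool; the thresholds are hypothetical and should be tuned for each model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class RetrainPolicy:
    min_f1: float = 0.80     # retrain if live quality falls below this
    max_drift: float = 0.25  # retrain if input drift exceeds this
    max_age_days: int = 30   # retrain on a schedule even if nothing looks wrong


def retrain_reasons(live_f1: float, drift_score: float, model_age_days: int,
                    policy: Optional[RetrainPolicy] = None) -> List[str]:
    """Return why the model should be retrained; an empty list means keep serving it."""
    policy = policy or RetrainPolicy()
    reasons = []
    if live_f1 < policy.min_f1:
        reasons.append(f"live F1 {live_f1:.2f} below {policy.min_f1:.2f}")
    if drift_score > policy.max_drift:
        reasons.append(f"drift {drift_score:.2f} above {policy.max_drift:.2f}")
    if model_age_days > policy.max_age_days:
        reasons.append(f"model is {model_age_days} days old")
    return reasons
```

Returning reasons instead of a bare boolean makes each retraining run explainable in logs and alerts.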

6. Business Risk Mitigation

- Value: Detects failing or biased models early

- How: Real-time monitoring, alerts, and A/B testing of models

- Impact: Helps prevent poor business decisions and public relations problems
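A/B testing of models needs a stable way to split traffic, so that each user keeps seeing the same model variant for the duration of the test. One common approach, sketched here under the assumption that each request carries a user ID, is to hash the ID and compare the result with the treatment share; the salt and variant names are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, treatment_share: float = 0.1,
                   salt: str = "fraud-model-v2-test") -> str:
    """Route a stable fraction of users to the candidate model.

    The same user_id (and salt) always maps to the same variant; changing
    the salt reshuffles users for a new experiment.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # first 32 bits mapped into [0, 1)
    return "candidate" if bucket < treatment_share else "control"
```

Logging the variant with every prediction lets the monitoring stack compare error rates, bias metrics, and business KPIs per arm before the candidate is promoted.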

Why MLOps is Important for Customers Deploying ML on the Cloud

Cloud deployment brings unique advantages and challenges that MLOps addresses effectively:

1. Leveraging Cloud Resources for Scalability

Use cloud services (such as AWS or Google Cloud) for elastic training and inference. For instance, Amazon SageMaker and Google Cloud Vertex AI endpoints can scale automatically with traffic.
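Managed endpoints commonly scale toward a target metric, such as invocations per instance or CPU utilization, by adding or removing instances. The arithmetic behind such a target-tracking rule can be sketched as follows; it is a simplification for illustration, not the exact algorithm AWS or Google Cloud use, and the capacity and utilization figures are assumptions you would measure by load-testing your own model.

```python
import math


def desired_instances(requests_per_sec: float, capacity_per_instance: float,
                      target_utilization: float = 0.7,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Instances needed so each runs at about `target_utilization` of its capacity.

    Headroom below 100% absorbs bursts while new instances start up; the
    min/max bounds keep the endpoint available and cap spend.
    """
    needed = requests_per_sec / (capacity_per_instance * target_utilization)
    return max(min_instances, min(max_instances, math.ceil(needed)))
```

For example, `desired_instances(120, 100, target_utilization=0.5)` returns 3, because 120 / (100 × 0.5) = 2.4 rounds up to 3.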

2. Continuous Integration and Deployment for Machine Learning Models

Apply existing DevOps tools (like AWS CodePipeline and GitHub Actions) to manage ML workflows.
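In practice, CI/CD for ML often adds a quality gate: a pipeline step (in CodePipeline, GitHub Actions, or similar) that compares the candidate model's metrics with the current production baseline and fails the build if the candidate is worse. A minimal Python version might look like this; the metrics file format, the F1 floor, and the allowed regression are assumptions.

```python
import json
import sys
from typing import List


def passes_gate(candidate: dict, baseline: dict,
                min_f1: float = 0.75, max_regression: float = 0.01) -> bool:
    """Block deployment if the candidate misses an absolute floor or regresses too far."""
    if candidate["f1"] < min_f1:
        return False
    return candidate["f1"] >= baseline["f1"] - max_regression


def main(argv: List[str]) -> int:
    """Usage: python gate.py candidate_metrics.json baseline_metrics.json"""
    with open(argv[1]) as f:
        candidate = json.load(f)
    with open(argv[2]) as f:
        baseline = json.load(f)
    ok = passes_gate(candidate, baseline)
    print("quality gate:", "PASS" if ok else "FAIL")
    return 0 if ok else 1  # a non-zero exit code fails the CI step


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv))
```

Combining an absolute floor with a relative check stops both clearly bad models and slow, silent regressions from reaching production.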

3. Version Control for Models

Track versions of data, code, and models with MLflow, DVC, or SageMaker Model Registry.
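A model registry is, at its core, a versioned catalogue of model artifacts with a lifecycle stage attached to each version. The in-memory toy below illustrates that idea only; it does not reproduce the API of MLflow or SageMaker Model Registry, and the stage names and artifact URIs are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str          # where the trained model file lives
    metrics: Dict[str, float]  # validation results recorded at registration
    stage: str = "Staging"     # "Staging", "Production", or "Archived"


class ModelRegistry:
    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, artifact_uri: str,
                 metrics: Dict[str, float]) -> ModelVersion:
        """Assign the next version number for `name` and store the artifact reference."""
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(len(versions) + 1, artifact_uri, metrics)
        versions.append(mv)
        return mv

    def promote(self, name: str, version: int) -> None:
        """Move one version to Production and archive whichever version held it before."""
        for mv in self._versions[name]:
            if mv.stage == "Production":
                mv.stage = "Archived"
        self._versions[name][version - 1].stage = "Production"

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the version currently serving traffic, if any."""
        return next((mv for mv in self._versions.get(name, [])
                     if mv.stage == "Production"), None)
```

Rolling back is then just `promote` on an earlier version, because the older artifact and its metrics are still in the registry.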

4. Performance Monitoring

Employ tools such as SageMaker Model Monitor or Prometheus/Grafana to keep track of performance and detect data drift.
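Drift detection boils down to comparing the distribution of live inputs with the distribution seen at training time. SageMaker Model Monitor and Evidently AI compute their own statistics; as a tool-independent illustration, here is the Population Stability Index (PSI), one widely used drift score, for a single numeric feature. The bin count, the epsilon for empty bins, and the interpretation thresholds in the docstring are conventional choices, not fixed rules.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` (live) against `expected` (training).

    PSI = sum_i (a_i - e_i) * ln(a_i / e_i) over equal-width bins of the
    training range. A common rule of thumb: < 0.1 stable, 0.1 to 0.25
    moderate shift, > 0.25 significant shift.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Out-of-range live values are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Running this per feature on a schedule, and alerting when the score crosses a threshold, is the basic loop that managed monitoring services automate.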

5. Model Governance

Maintain an integrated audit trail and provide model explainability (for example, with SHAP, LIME, or SageMaker Clarify).
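SHAP, LIME, and SageMaker Clarify attribute individual predictions to input features. A simpler, model-agnostic technique that gives a global view, shown here instead of those libraries, is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch assumes any `predict` callable that maps a feature row to a class label.

```python
import random
from typing import Callable, List, Sequence


def permutation_importance(predict: Callable[[Sequence[float]], int],
                           X: List[List[float]], y: List[int],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)

    def accuracy(rows: List[List[float]]) -> float:
        return sum(predict(r) == t for r, t in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances
```

A feature the model ignores scores about zero, while a large drop flags a feature the model relies on, which is a useful starting point for a governance review.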

6. Integration with Business Applications

Expose models as APIs that real-time applications can call (for example, for fraud detection or recommendations).
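Exposing a model as an API usually means wrapping it in a small HTTP service. The tool table lists Flask and FastAPI for this; to keep the example dependency-free, this sketch uses Python's standard-library `http.server` instead, which is fine for illustration but not intended for production traffic. The `/predict` route, the request schema, and the `score` function are hypothetical placeholders for a real fraud model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def score(features: dict) -> float:
    """Placeholder model: a real service would load a trained artifact at startup."""
    return min(1.0, float(features.get("amount", 0)) / 10_000)


def handle_predict(body: bytes) -> Tuple[int, bytes]:
    """Validate the JSON request and return (HTTP status, JSON response body)."""
    try:
        features = json.loads(body)["features"]
        if not isinstance(features, dict):
            raise TypeError("features must be an object")
        result = {"fraud_score": score(features)}
    except (ValueError, KeyError, TypeError):
        return 400, json.dumps({"error": 'expected {"features": {...}}'}).encode()
    return 200, json.dumps(result).encode()


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Blocking call: serve predictions on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

After calling `serve()`, a client could run `curl -X POST localhost:8080/predict -d '{"features": {"amount": 2500}}'` and receive `{"fraud_score": 0.25}`. Keeping request handling in `handle_predict` makes it testable without starting a server.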

Summary

Think of MLOps as DevOps for machine learning. Just as DevOps changed how software is delivered, MLOps is reshaping how organizations deploy AI at scale, bringing flexibility, oversight, and faster insights.

By implementing MLOps practices, organizations can transform their machine learning initiatives from experimental projects into reliable, scalable systems that drive real business value. The investment in MLOps infrastructure and processes pays dividends through faster deployment cycles, improved model performance, and reduced operational risks.

About the Author

S. Ranjan is a leading researcher in technology and innovation with extensive experience in cloud architecture, AI integration, and modern development practices. Our team continues to push the boundaries of what's possible in technology.